Leaders In AI Infra

We manage GPU infrastructure to run AI workloads

Our process

01. AI Infrastructure Expertise

We procure, deploy, operate, and maintain complex AI infrastructure of GPUs, high-speed fabric, and high-power equipment.

02. Platform connected

Through strategic partnerships with platform companies that drive demand for AI workloads, you can start generating revenue on investment immediately.

03. Blockchain enhanced

We have strong partnerships with web3 companies for seamless payment rails, tokenization, and workload generation.

Bandwidth

Latency

Security

04. Network Expertise

AI models move significant amounts of data across the infrastructure, so we reduce GPU idle time by building H100 clusters with high-speed InfiniBand and Ethernet.
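As a rough illustration of why fabric speed matters for GPU idle time, the sketch below estimates how long a data transfer takes at a given link rate. The payload size and link rates are hypothetical values chosen for illustration, not measurements from any cluster, and the model ignores protocol overhead and latency.

```python
def transfer_seconds(payload_gigabytes: float, link_gigabits_per_s: float) -> float:
    """Idealized time to move a payload over one link (no overhead, no latency)."""
    if link_gigabits_per_s <= 0:
        raise ValueError("link rate must be positive")
    payload_gigabits = payload_gigabytes * 8  # 1 byte = 8 bits
    return payload_gigabits / link_gigabits_per_s


# Hypothetical example: a 100 GB payload over a 100 Gb/s link and a 400 Gb/s link.
slow = transfer_seconds(100, 100)
fast = transfer_seconds(100, 400)
print(f"100 Gb/s link: {slow:.1f} s, 400 Gb/s link: {fast:.1f} s")
```

In this simplified model, a link that is four times faster cuts the time GPUs spend waiting on the same transfer to a quarter.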

05. Sustainable, Efficient Computing

Through collaborations with leaders in immersion and liquid cooling, plus expertise in recertified equipment, we reduce both operational carbon emissions and embodied carbon impact while lowering OpEx.

Our services

NVIDIA DGX B200

NVIDIA H100 HGX

AMD MI300X

We build out AI infra

We automate your workflows by connecting your favorite applications, boosting efficiency and enhancing productivity.

Docker Container

NVIDIA NIM Deployed

Deploy AI Workload

Host them on our partners

We partner with the largest AI workload platforms to drive massive demand from VC-backed startups that are looking for GPU compute.

+15%

Sustainable Returns

On-demand GPU compute is a high-growth market driven by AI workloads. Own high-demand GPUs, earn sustainable returns, and see exactly what you're paying for with our open, transparent total cost of ownership.

Our Data Centers

2024: Sacramento

2024: Wyoming

2025: Edge (coming soon)

Plans to suit your needs

L40S

Power-efficient inference

Experience breakthrough multi-workload performance with the NVIDIA L40S GPU.

Combining powerful AI compute with best-in-class graphics and media acceleration, the L40S GPU is built to power the next generation of data center workloads, from generative AI and large language model (LLM) inference and training to 3D graphics, rendering, and video.

H100 HGX

Training Optimized

The NVIDIA® H100 Tensor Core GPU delivers unprecedented acceleration to power the world’s highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications.

32 PFLOPS of FP8 AI performance and 640 GB of HBM3 at 27 TB/s.

NVLink: a fully connected topology from NVSwitch enables any H100 to talk to any other H100 concurrently at a bidirectional speed of 900 gigabytes per second (GB/s).
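The system-level figures above can be broken down per GPU. This is a minimal sketch that assumes the figures describe an 8-GPU HGX H100 system (the GPU count of 8 is an assumption here, not stated in the plan description) and simply divides the aggregates evenly.

```python
GPUS_PER_SYSTEM = 8  # assumption: 8-GPU HGX H100 configuration

# Aggregate figures quoted for the H100 HGX plan.
system = {
    "fp8_pflops": 32.0,
    "hbm3_gb": 640.0,
    "memory_bandwidth_tb_s": 27.0,
}

# Even split across GPUs; real per-GPU numbers depend on the exact SKU.
per_gpu = {name: value / GPUS_PER_SYSTEM for name, value in system.items()}

for name, value in per_gpu.items():
    print(f"{name}: {value:g} per GPU")
```

Under that assumption, each GPU contributes 80 GB of HBM3, which is a convenient number to keep in mind when sizing a model against a single device.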

NVIDIA DGX B200

The foundation for your AI center of excellence

Equipped with eight NVIDIA Blackwell GPUs interconnected with fifth-generation NVIDIA® NVLink®, DGX B200 delivers leading-edge performance, offering 3X the training performance and 15X the inference performance of previous generations.

Leveraging the NVIDIA Blackwell GPU architecture, DGX B200 can handle diverse workloads—including large language models, recommender systems, and chatbots.

DGX B200 is a unified AI platform for develop-to-deploy pipelines, for businesses of any size at any stage in their AI journey.

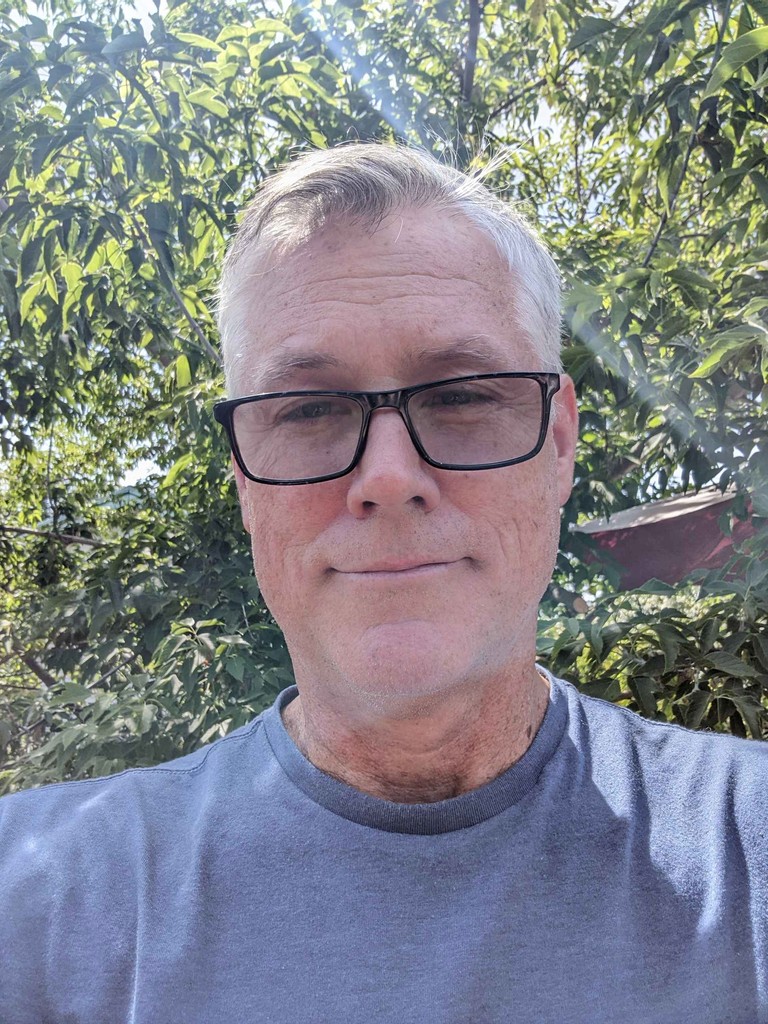

What our clients say

"FarmGPU reduced my AWS costs by 70%"

Switching from AWS GPU instances not only saved us 70% on operational expenses but also increased developer productivity, since we can spin up Docker instances in just a few seconds.

Dylan Rose

CEO - Evergreen

"GPU on-demand is a game changer for game development"

Lightning-fast access to GPUs has sped up test and development time by a huge amount.

Andrew Ayre

CEO and Founder - Other Ocean

"They were able to quickly get our custom builds up to support our network launch"

FarmGPU delivered excellent support and great costs to build out custom AI infrastructure for our new blockchain network.

CEO

CEO - New Network

"FarmGPU is our launch partner for our new decentralized compute network"

They have built out an excellent team to manage and host the infrastructure for our new GPU network.

Paul Hainsworth

CEO - Berkeley Compute

Meet the team

Get in touch

Data Center and Office

3141 Data Dr, Rancho Cordova, CA 95670

Phone
